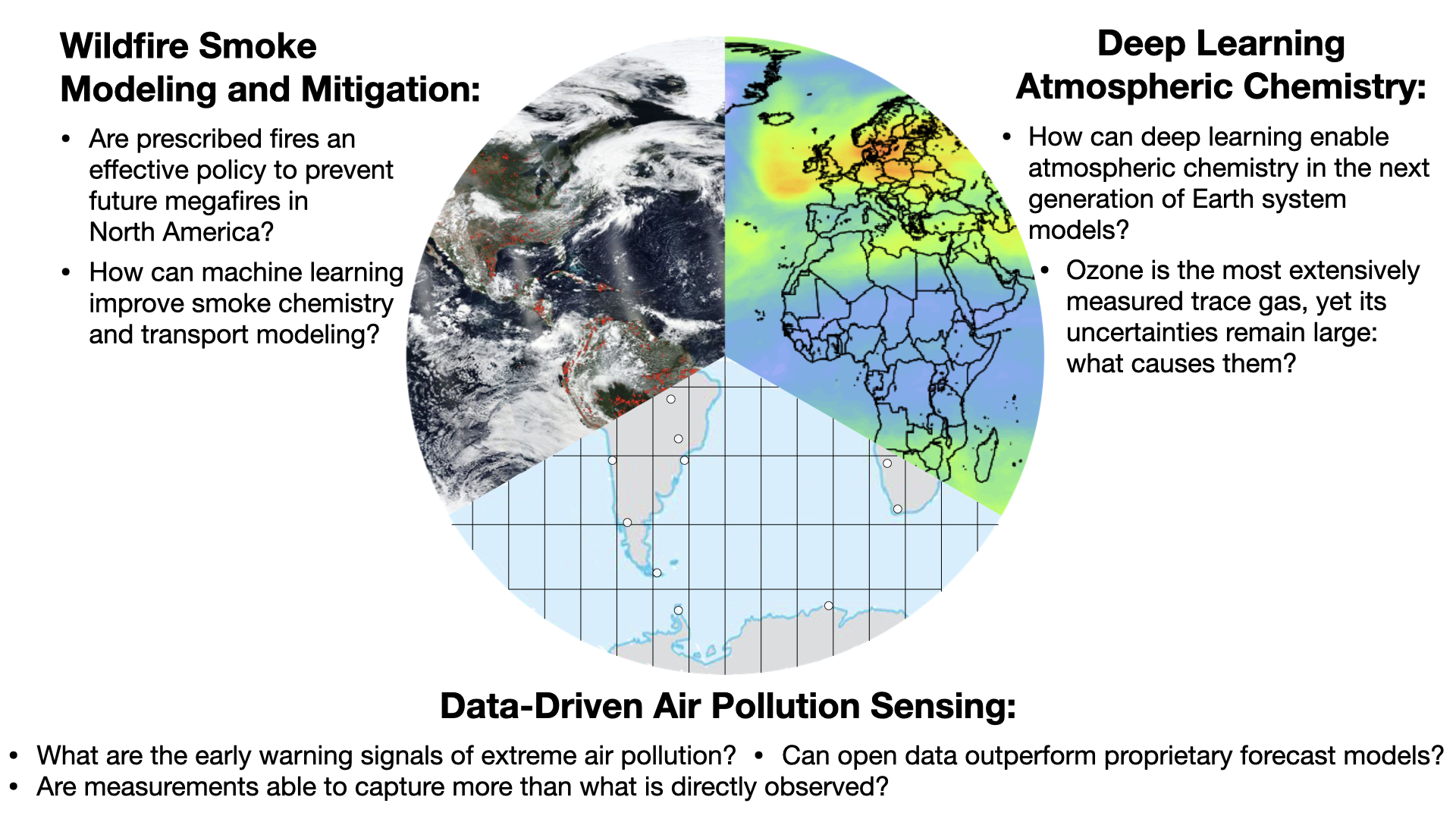

Research Questions

We work to advance our understanding of air quality and its intersections with human and environmental systems. We are particularly interested in leveraging innovative data science and machine learning techniques to tackle complex problems in air pollution modeling and wildfire smoke management. We look to approach problems from unique perspectives, uncovering insights through the exploration of unconventional methods and data sources.

Research Areas

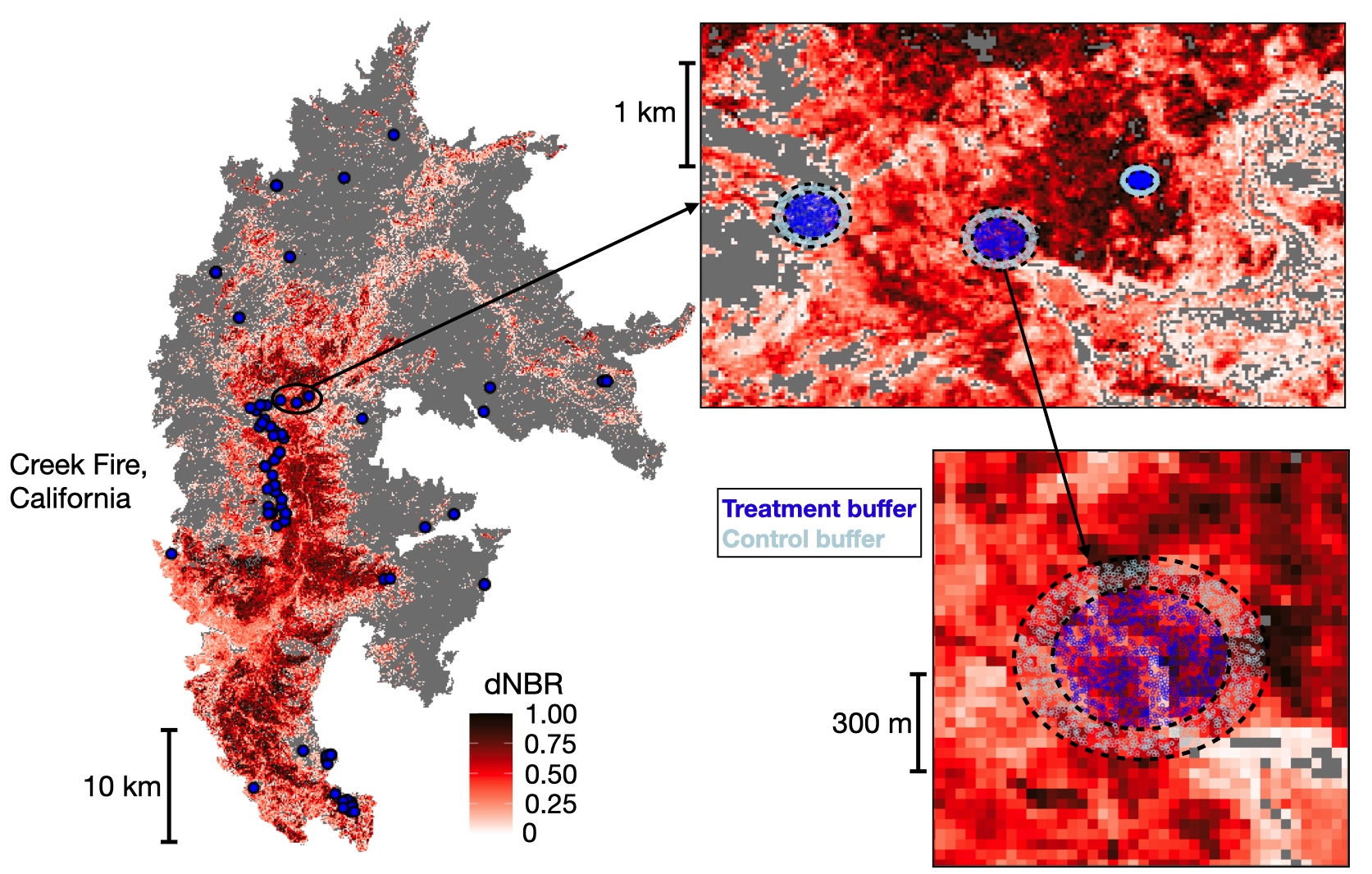

Wildfire Smoke Modeling and Mitigation

Due to a warming climate, a legacy of fire suppression, and expanding development into the wildland-urban interface (WUI), the western US has experienced a recent rise in extreme wildfire seasons. Wildfires not only damage ecosystems and infrastructure but also degrade air quality and pose serious public health risks from smoke exposure. Prescribed ("Rx") fire is often promoted as a policy solution in the western US, yet its use is limited in practice and few studies have evaluated its effectiveness at reducing wildfire impacts. Our research is motivated by key gaps in our understanding: (1) we lack observational and modeling systems to accurately project how scaling Rx fire usage would affect air quality and health outcomes in the western US; and (2) the efficacy of past Rx fire treatments remains poorly quantified across varied landscapes and fire seasons. It is unclear whether expanding Rx burning will reduce wildfire risk or simply add to the smoke burden without preventing future fires. Some of our recent work shows that Rx fire treatments, while modestly effective, are frequently least successful in the WUI, a central focus of wildfire policy. Such findings highlight the limitations of current wildfire strategies and underscore the need for data-driven, policy-relevant approaches to guide the proposed expansion of Rx fire.

Publications: Kelp et al. (2025) AGU Adv., Kelp et al. (2023) Earth's Future

Mentored Publications: Chung et al. (2025) ES&T

Co-Authored Wildfire Publications: Feng et al. (2025) PNAS, Qiu et al. (2025) Nature, Qiu et al. (2024) ES&T, Liu et al. (2024) Int J Wildland Fire

Points for Policymakers: Prescribed fire research brief

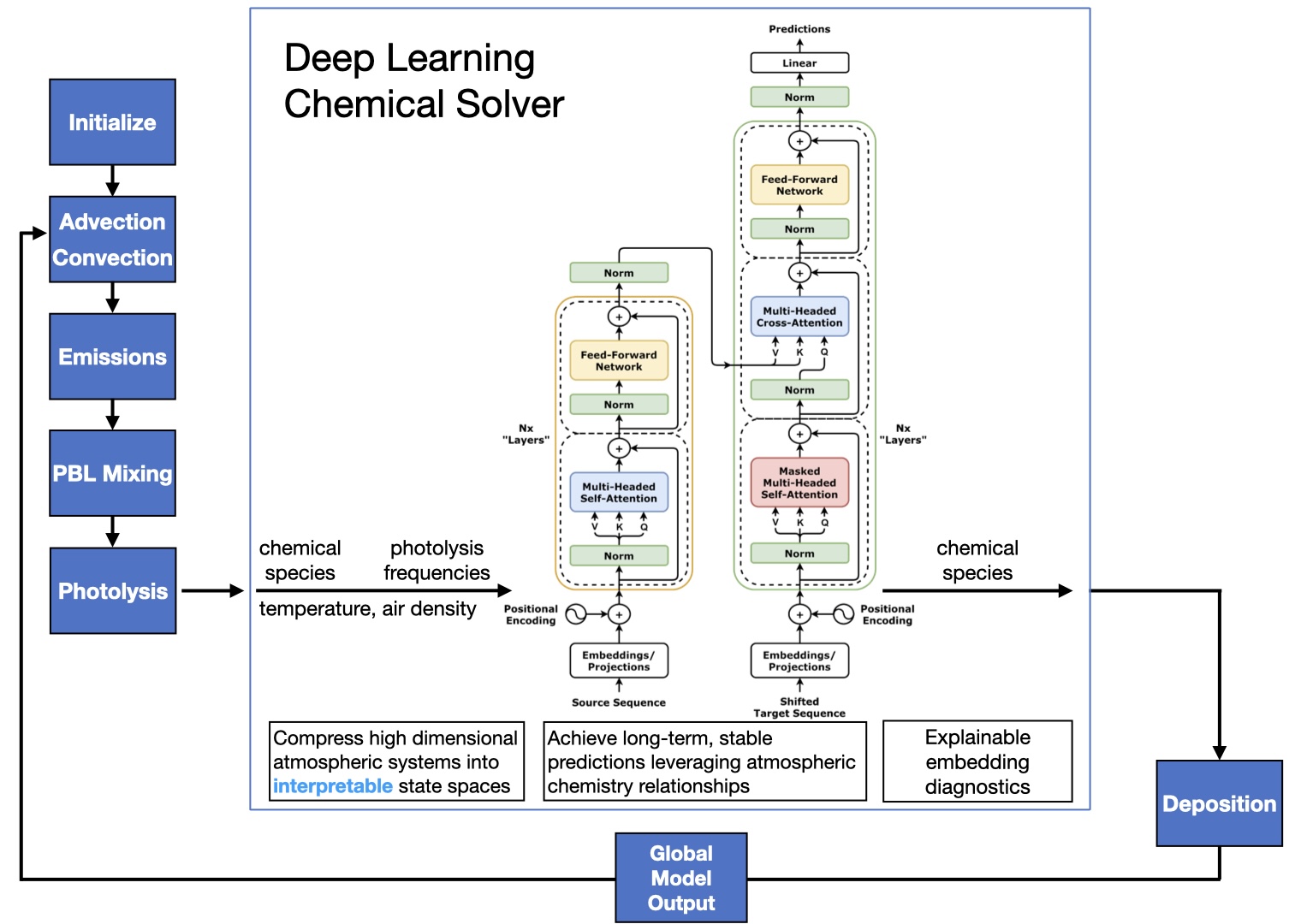

Deep Learning Atmospheric Chemistry

Ozone is a pollutant that harms human health, damages vegetation, and acts as a short-lived climate forcer in the upper atmosphere. Despite ozone being the most-measured trace gas in our observational record, global atmospheric models disagree widely on its spatial and temporal patterns and trends. Modeling tropospheric ozone is an ultimate test of a global atmospheric model's skill, as bias can arise from any physical process: emissions, chemistry, stratosphere-troposphere exchange, or boundary layer mixing. Deep learning seems well-suited for atmospheric chemistry modeling because it can learn complex, non-obvious interactions in air pollution data and accelerate chemical simulations. Our past work explored how deep learning can emulate and replace computationally expensive components of global atmospheric models for fast, stable simulations. Our current research probes what foundation models actually learn about atmospheric chemistry and how they can complement process-based models. We develop architectures that improve chemical generalization, use AI to transfer skill from data-rich regions to the rest of the globe, and work toward physically consistent Large-X Models (LxM) that blend data-driven and mechanistic components to better diagnose ozone bias.

Publications: Kelp et al. (2022) JAMES, Kelp et al. (2020) JGR: Atmos, Kelp et al. (2018) arXiv

Future Priorities of AI in Air Quality: Highlight Paper on AI for Tropospheric Ozone Research (2025), NeurIPS AI4Science Dataset Competition Winning Paper (2025)

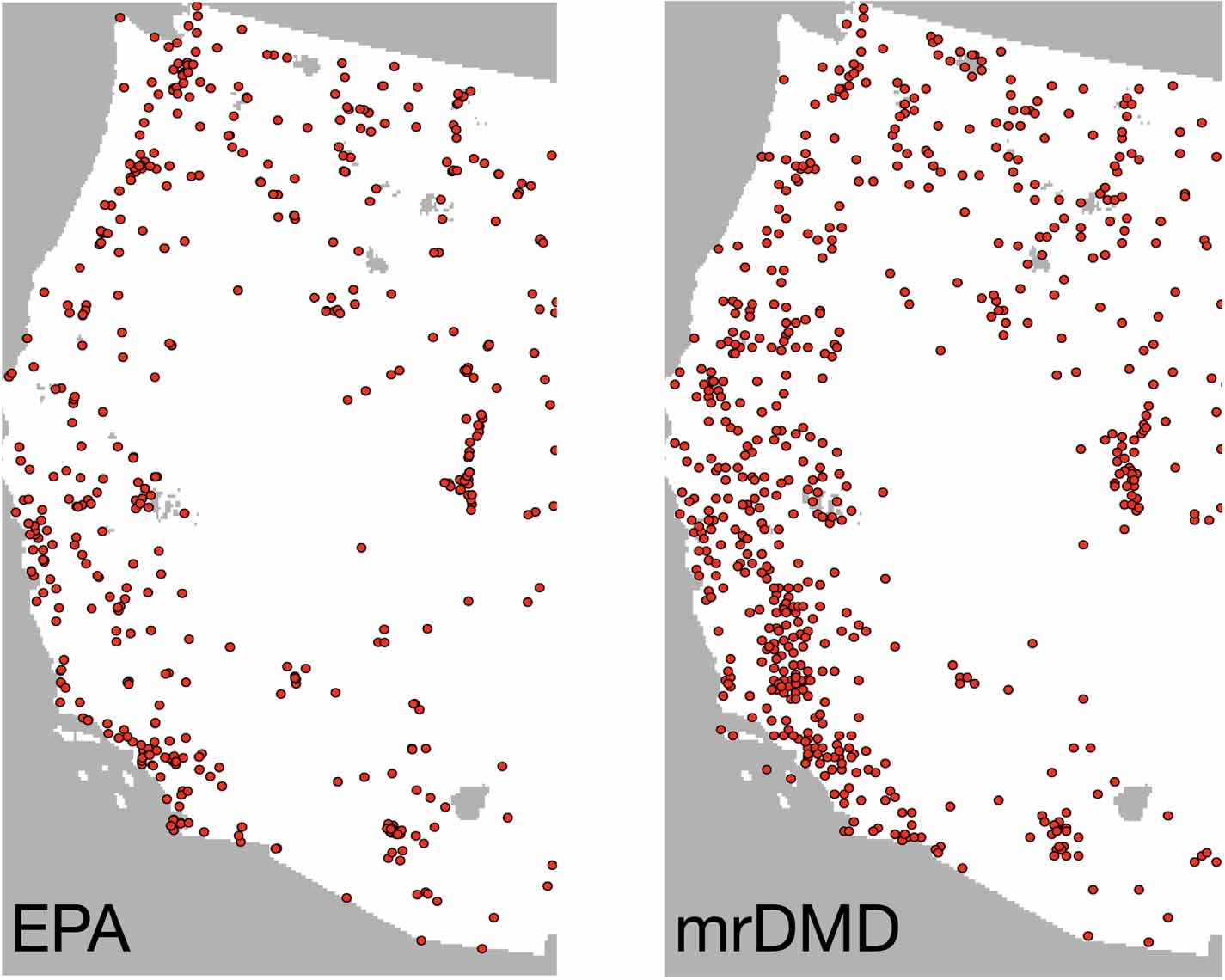

Data-Driven Air Pollution Sensing

Despite major investments in air quality (AQ) monitoring, existing sensor networks often fail to capture extreme air pollution. We use data-driven methods to improve the design of sensor networks, air quality forecasts, and environmental early warning systems. In one national-scale study, we applied compressed sensing, a signal processing method that uncovers important spatiotemporal patterns in data, to determine optimal AQ sensor locations based on recent pollution trends. This analysis revealed major gaps in the EPA's current monitoring network across the western US, particularly in regions affected by wildfire smoke. In a related study, we incorporated equity constraints into the sensor network optimization to improve coverage in historically segregated neighborhoods in cities such as St. Louis and Houston. These approaches provide a foundation for rethinking how we design AQ monitoring networks to better capture extreme events and ensure more equitable coverage. At the same time, commercial platforms increasingly deliver AQ forecasts through proprietary systems, raising concerns about transparency and public accessibility. These systems are likely to become more prevalent in the coming decade due to the rapid commercialization of environmental data and advances in AI and cloud computing. In response, our research is guided by a set of core questions: What are the early warning signals of extreme air pollution (fires, inversions, smog)? Can open data outperform commercial forecasts?
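To make the sensor-placement idea above concrete, here is a minimal sketch of one common data-driven approach: extract the dominant spatial patterns from historical pollution fields with an SVD, then greedily choose the grid cells that best distinguish those patterns (a hand-rolled column-pivoted QR). This is an illustration under stated assumptions, not the method used in the studies listed below. The function names `select_sensor_sites` and `reconstruct_field`, the synthetic data, and the choice of SVD modes as the basis are all hypothetical.

```python
import numpy as np


def select_sensor_sites(fields, n_sensors):
    """Choose sensor locations from historical fields.

    fields: array of shape (n_locations, n_times), e.g. daily PM2.5 maps
            flattened to one row per grid cell (assumed layout).
    Returns (site_indices, basis), where basis holds the leading spatial modes.
    Assumes n_sensors does not exceed the rank of the anomaly matrix.
    """
    # Work with anomalies: remove the time mean at every location.
    anomalies = fields - fields.mean(axis=1, keepdims=True)
    # Leading left singular vectors are the dominant spatial patterns.
    modes, _, _ = np.linalg.svd(anomalies, full_matrices=False)
    basis = modes[:, :n_sensors]

    # Greedy column-pivoted QR on basis.T: each column is one location.
    residual = basis.T.copy()
    available = np.ones(residual.shape[1], dtype=bool)
    sites = []
    for _ in range(n_sensors):
        norms = np.where(available, np.linalg.norm(residual, axis=0), -1.0)
        j = int(np.argmax(norms))  # location with the most unexplained signal
        sites.append(j)
        available[j] = False
        q = residual[:, j] / np.linalg.norm(residual[:, j])
        residual -= np.outer(q, q @ residual)  # deflate the chosen direction
    return sites, basis


def reconstruct_field(basis, sites, readings):
    """Estimate the full anomaly field from readings at the chosen sites."""
    coeffs, *_ = np.linalg.lstsq(basis[sites, :], readings, rcond=None)
    return basis @ coeffs


# Synthetic demonstration: 200 grid cells, 60 days, 4 underlying patterns.
rng = np.random.default_rng(0)
demo_fields = rng.normal(size=(200, 4)) @ rng.normal(size=(4, 60))
demo_sites, demo_basis = select_sensor_sites(demo_fields, n_sensors=4)
demo_mean = demo_fields.mean(axis=1)
demo_estimate = demo_mean + reconstruct_field(
    demo_basis, demo_sites, demo_fields[demo_sites, 0] - demo_mean[demo_sites]
)
```

Because the synthetic fields are exactly low rank, four well-chosen sites are enough to recover a full day's map here. Real pollution fields are only approximately low rank, so in practice the reconstruction error, and any added constraints such as equity requirements, would drive how many sensors are needed and where they go.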

Publications: Kelp et al. (2023) GeoHealth, Kelp et al. (2023) ERL, Kelp et al. (2022) ERL

Co-Authored Sensor Publications: Kawano et al. (2025) Sci. Adv., Yang et al. (2022) ES&T